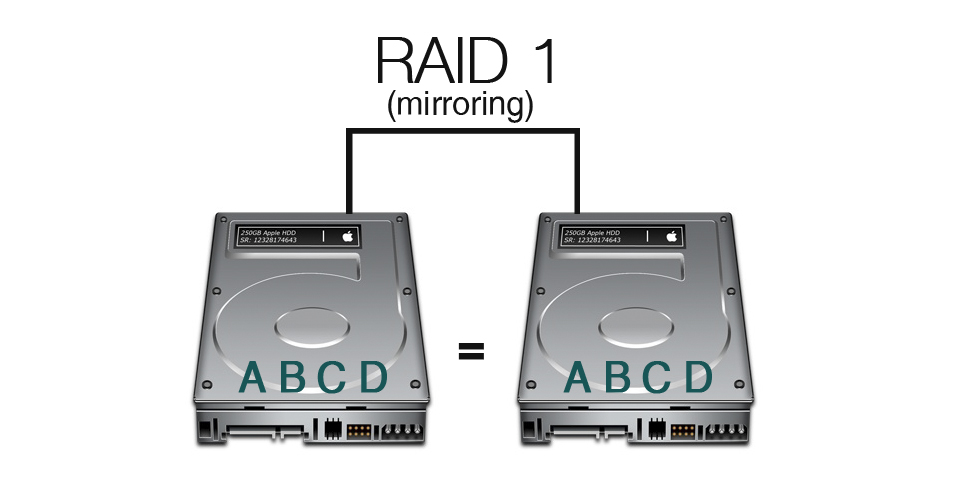

At work, we have a number of servers that use the default Windows software RAID implementation to ensure OS disk redundancy (RAID 1). Whether software RAID 1 is a good solution for this situation is debatable, but that is outside the scope of this article.

One Thursday morning I noticed that one of the OS disks on the SQL server was showing up as missing in Windows disk manager. The server was still running fine, which is why we use RAID 1 in the first place. Of course, the defective drive needed to be replaced as soon as possible, so I went out, bought a new drive and told my colleagues the system would not be available that evening.

Normally, swapping a drive is super easy. You just replace the defective drive, tell Windows to drop the missing drive from the mirror and create a new mirror with the still-working drive and the new drive.

Unfortunately, Windows disk manager came up with the following error when I tried to create the new mirror: “All disks holding extents for a given volume must have the same sector size, and the sector size must be valid.” Bummer. After some googling it became apparent that it would not be possible to create a mirror using this combination of disks. Luckily, it should still be possible to clone the old disk to the new disk. Clonezilla to the rescue, or not…
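The rule behind that error message can be sketched in a few lines of Python. This is only an illustration of the check, not how Windows implements it: the `Disk` class, the `can_mirror` function and the 512/4096 byte values are assumptions made for the example (the actual sector sizes of my two drives are not recorded here), and "valid" is assumed to mean a power of two of at least 512 bytes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Disk:
    """Hypothetical description of a disk, just enough for the check."""
    name: str
    logical_sector_bytes: int  # logical sector size the OS reports


def can_mirror(disks: list[Disk]) -> bool:
    """Return True if all disks share one logical sector size that looks valid.

    Assumption: a "valid" size is a power of two and at least 512 bytes.
    """
    sizes = {d.logical_sector_bytes for d in disks}
    if len(sizes) != 1:
        return False  # mixed (or no) sector sizes: no mirror possible
    size = next(iter(sizes))
    return size >= 512 and (size & (size - 1)) == 0


# Illustrative values only: an older 512-byte drive next to a newer 4096-byte drive.
old_disk = Disk("old", 512)
new_disk = Disk("new", 4096)

print(can_mirror([old_disk, new_disk]))            # False
print(can_mirror([old_disk, Disk("spare", 512)]))  # True
```

Running a check like this before buying the replacement would have told me that a drive with a different logical sector size could never join the existing mirror.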

After booting from my Clonezilla thumb drive and walking through the disk cloning wizard, I got an error stating that the disk could not be cloned because the new drive was 5MB smaller. That’s not great news, but I could still clone the separate partitions and fix the MBR using the Windows installer thumb drive. So I booted back into Windows, shrank the data partition by about 100MB, booted back into Clonezilla and started the partition clone. About one hour later the cloning process was finished. Unfortunately, I was unable to fix the MBR because the BootRec utility was not able to find the Windows installation.
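The size problem comes down to simple arithmetic: a whole-disk clone only fits if the target is at least as large as the source, otherwise the partitions have to shrink by at least the difference. A minimal sketch, where `shrink_needed_mib` is a made-up helper and the disk sizes are placeholders rather than the real capacities of my drives:

```python
MIB = 1024 * 1024


def shrink_needed_mib(source_bytes: int, target_bytes: int, margin_mib: int = 0) -> int:
    """Return how many MiB the source layout must shrink to fit on the target.

    Returns 0 when the target is already large enough. The optional margin is
    extra slack added on top of the raw size difference.
    """
    deficit = source_bytes - target_bytes
    if deficit <= 0:
        return 0
    deficit_mib = -(-deficit // MIB)  # integer ceiling division
    return deficit_mib + margin_mib


# Placeholder sizes: the target is 5 MiB smaller than the source.
source = 1_000_000 * MIB
target = source - 5 * MIB

print(shrink_needed_mib(source, target))                 # 5
print(shrink_needed_mib(source, target, margin_mib=95))  # 100
```

Shrinking by a round 100MB instead of the bare 5MB is the same idea as the `margin_mib` parameter: a bit of slack is cheaper than a second failed clone.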

At this point it was somewhere around 1AM and the server in question needed to be working at 7AM, so I was beginning to get a little nervous. I could boot the old, working drive and let it run for another day, but with a 7 year old disk this would be a big risk, so another solution was desirable.

My next attempt to clone the disk turned out to be a good move. I used a very useful Sysinternals utility named Disk2vhd. This utility is able to clone a physical disk to a VHD or VHDX, and it can even back up the OS disk of a system while the system is running by using shadow copies. It took about 45 minutes, but after that time I had my VHDX file. Unfortunately, the utility I used to restore the image to the new disk (Vhd2disk) only accepted VHD files, so I needed to run Disk2vhd again. Unfortunately (I use that word often in this post), Vhd2disk was unable to write back to the physical disk. I ran it twice and it crashed both times just before the process ended. My guess is that it would not complete for the same reason Clonezilla could not perform a full disk clone.
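VHD and VHDX are different on-disk formats, which is why a tool can accept one and reject the other. As a rough sketch, the two can be told apart by their signatures: a VHDX file starts with the bytes `vhdxfile`, while a VHD carries a 512-byte footer starting with `conectix` at the end of the file (dynamic VHDs also keep a copy at the start). The function name `detect_vhd_format` is my own, and the check only looks at signatures; it does not validate the image.

```python
import os

VHDX_SIGNATURE = b"vhdxfile"  # first 8 bytes of a VHDX file
VHD_COOKIE = b"conectix"      # first 8 bytes of the 512-byte VHD footer


def detect_vhd_format(path: str) -> str:
    """Return "vhdx", "vhd" or "unknown" based on file signatures only."""
    size = os.path.getsize(path)
    with open(path, "rb") as f:
        head = f.read(8)
        if head == VHDX_SIGNATURE:
            return "vhdx"
        if head == VHD_COOKIE:
            # Dynamic and differencing VHDs store a footer copy at offset 0.
            return "vhd"
        if size >= 512:
            # Fixed VHDs only have the footer in the last 512 bytes.
            f.seek(size - 512)
            if f.read(8) == VHD_COOKIE:
                return "vhd"
    return "unknown"
```

Calling `detect_vhd_format` on the Disk2vhd output before starting the restore would have shown that I had a VHDX on my hands and saved me a 45 minute round trip.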

Just after the second attempt to write the VHD to disk, the old working disk broke down. At this point it was around 4AM, with 3 hours left and no working drive to fall back on. It was time for some drastic measures.

I had written the VHD containing the OS disk (osVHD) to another RAID 1 set, which mainly contains the database files. Being unable to boot the server, I took both drives from that set and placed them in separate systems. On the first system I started the import process to our hypervisor cluster (Xenserver); on the second I started uploading the SQL databases to a new virtual disk on the main Xenserver cluster. After a short time I noticed this would take too long: importing the OS disk alone would take over 4 hours, and I had about 2.5 hours left. So I switched tactics. I had a VHD, which is the native virtual disk format for Windows, including Hyper-V, so I installed Hyper-V on a lab system, connected one of the RAID 1 disks to it and created a new VM with the osVHD. It booted on the first attempt.

Now I only had to get the database files to the new VM. To do this I created a new VHD, mounted it locally and copied all databases to it. Next I attached the VHD containing the databases to the VM, and we were back online.

At this point it was just before 7AM and some of my colleagues were already entering the office, but the server was working again, albeit on one (fairly new) disk. Fortunately it was Friday, so it would only need to work for about 12 hours before I would have an entire weekend to migrate the VM to our Xenserver cluster.

That Friday evening I started working towards the migration to the Xenserver cluster. My initial idea was to prepare the VHD for direct import.

The first order of business was to shrink the OS disk, because it would consume 1TB on the cluster while only 200GB was in use. Before this could be done the disk needed to be defragmented. After defragmentation the partition could only be shrunk to around 400GB, because there was an “unmovable file” in the way (a Windows limitation). 400GB was still way better than 1TB, but I had only shrunk the partition; the disk size was still 1TB, and since Xenserver will import the entire disk (even if there is no data on it) this would be a problem.

The process of shrinking the partition took about 4 hours and required a trip back to the office, because I had not enabled remote desktop on the Hyper-V server (it was late, all right).

Luckily there is a solution for every problem. In the old days, up until Xenserver 6.2, there was a physical-to-virtual import utility available, and I still had a copy of it. Of course, it was no longer possible to directly import a “physical” machine into our Xenserver cluster, but it could still export an XVA (Xenserver Virtual Appliance).

After so many problems there was finally a stroke of luck, or not… During the export the makeshift Hyper-V server got a BSOD on a Realtek driver. The second attempt (Saturday evening) finished without errors, and the import started Sunday morning around 8AM. The import finished around 3PM (still Sunday). Unfortunately, the newly created VM produced a BSOD: “STOP: c00002e2 Directory Services could not start….”, which roughly translates to “unable to read the Active Directory database (NTDS.DIT)”.

After a lot of digging around, I noticed the boot drive letter had changed from C to H. After changing the drive letter back to C by following this article from Microsoft, the server started functioning again :).

At this point it was around 6PM on Sunday. The server was back up, and all applications were working again.

A lot of lessons can be learned from this experience, for example:

- Relying on 7 year old drives for a critical core business application is not a good plan. The typical lifespan of an HDD is about 5 years; after that time we need to swap out the drives of critical systems.

- New and old drives don’t always mix. Making the transition to virtual first and only later trying to restore to physical is a good way to go, especially with older drives.

- A single physical server is not adequate for a critical core business application. Redundancy is important, either at the hypervisor level or at the application level.

image from: https://www.datarc.ru